How to data mine a website: services

Data mining is the process of extracting useful information from large data sets. It can reveal trends, patterns, and relationships between data points, and it can be used to generate new hypotheses, test existing theories, and build predictive models. There are many ways to data mine a website. In this article, we will discuss four common methods:

- Web scraping is the process of extracting data from web pages. It can be used to collect data from online forms, social media sites, and other sources.
- Web log analysis is the process of analyzing the log files a web server writes as it handles requests. It can be used to track user behavior, identify website errors, and spot traffic trends.
- Web page analysis is the process of analyzing the structure and content of web pages. It can be used to find errors such as broken links, optimize pages for search engines, and improve the user experience.
- Web server analysis is the process of analyzing the configuration and performance of web servers. It can be used to find bottlenecks, tune server performance, and improve website uptime.
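Web log analysis is the easiest of these methods to sketch. The Python example below assumes the server writes access logs in the Common Log Format; lines in the Combined Log Format also match, because the extra referrer and user-agent fields at the end are simply ignored. The regular expression, the `analyze_log` helper, and the sample lines are illustrative assumptions, not taken from any particular tool. The code counts responses per status code and tallies which paths returned errors.

```python
import re
from collections import Counter

# Common Log Format: host ident authuser [time] "METHOD path protocol" status size
CLF_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\S+)'
)


def analyze_log(lines):
    """Return (status code counts, counts of paths that returned 4xx/5xx)."""
    status_counts = Counter()
    error_paths = Counter()
    for line in lines:
        match = CLF_PATTERN.match(line)
        if match is None:
            continue  # skip lines that are malformed or in another format
        status = int(match.group("status"))
        status_counts[status] += 1
        if status >= 400:
            error_paths[match.group("path")] += 1
    return status_counts, error_paths


# Made-up sample log lines for illustration.
sample_log = [
    '127.0.0.1 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326',
    '127.0.0.1 - - [10/Oct/2023:13:55:40 +0000] "GET /missing.html HTTP/1.1" 404 512',
    '10.0.0.5 - - [10/Oct/2023:13:56:02 +0000] "POST /login HTTP/1.1" 500 0',
    '10.0.0.5 - - [10/Oct/2023:13:56:10 +0000] "GET /missing.html HTTP/1.1" 404 512',
]

statuses, errors = analyze_log(sample_log)
print(statuses)
print(errors.most_common(1))  # → [('/missing.html', 2)]
```

Unmatched lines are skipped rather than raising an error, which suits messy real-world logs; you may prefer to count them so format problems do not go unnoticed.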

There are two main ways to data mine a website. The first is to use a web crawler, a piece of software that automatically moves through a website page by page and collects data. The second is to extract only the data you need yourself, either by writing a script or by using a tool like Web Scraper.
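A web crawler of the first kind can be sketched with only Python's standard library. This minimal breadth-first crawler follows `<a href>` links, stays on the starting host, and stops after `max_pages`. The names `crawl`, `LinkExtractor`, and `fetch_html` are hypothetical, and `fetch_html` assumes pages are UTF-8. Because the `fetch` function is a parameter, the example runs against a made-up two-page site instead of the network.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse
from urllib.request import urlopen


class LinkExtractor(HTMLParser):
    """Collect the href value of every <a> tag in an HTML document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch_html(url):
    """Download a page and decode it, assuming UTF-8."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(start_url, fetch=fetch_html, max_pages=50):
    """Breadth-first crawl restricted to start_url's host; returns {url: html}."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    pages = {}
    while queue and len(pages) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        pages[url] = html
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments so each page is queued once.
            absolute, _ = urldefrag(urljoin(url, href))
            if urlparse(absolute).netloc == host and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return pages


# A made-up site served from a dict instead of the network.
fake_site = {
    "https://example.com/": '<a href="/about">About</a> <a href="https://other.org/">Other</a>',
    "https://example.com/about": '<a href="/">Home</a> <a href="/about#team">Team</a>',
}
pages = crawl("https://example.com/", fetch=fake_site.__getitem__)
print(sorted(pages))
```

A real crawler would also need error handling for failed requests, a pause between requests, and a robots.txt check; none of these are included in this sketch.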

Web scraping is a powerful way to extract data from websites, but it is important to be aware of the potential legal implications. In some cases, it may be possible to data mine a website without violating any laws, but whether a particular project is permitted depends on factors such as the site's terms of service and where you operate. It is always advisable to consult a lawyer before scraping data from a website.
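One technical step that often accompanies that review is honoring a site's robots.txt file, which states which paths the owner does not want crawled. Python's standard `urllib.robotparser` module can evaluate those rules. The robots.txt content and the user-agent string below are made up; in practice you would call `set_url()` with the site's robots.txt address and then `read()`.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt for illustration.
robots_txt = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

agent = "my-research-bot"  # hypothetical user-agent string
for url in ["https://example.com/products", "https://example.com/private/report"]:
    verdict = "allowed" if parser.can_fetch(agent, url) else "disallowed"
    print(url, verdict)

# Seconds the site asks crawlers to wait between requests (None if unspecified).
print("crawl delay:", parser.crawl_delay(agent))
```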

Top services for data mining a website

I will provide you with outstanding SEO-optimized articles, blog posts, and website content

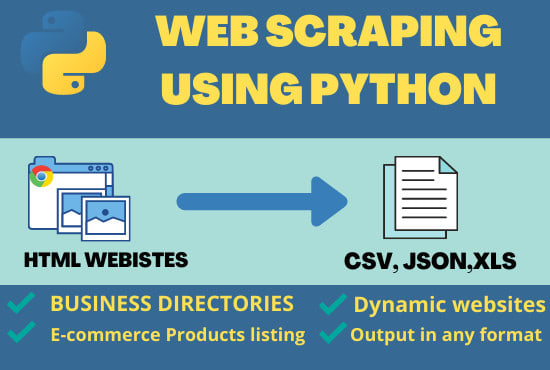

I will scrape any website using Python

I will write cryptocurrency and blockchain articles

I will do Python web scraping and data mining on any website

I will do web scraping and data mining on any website

I will teach coding and how to write professional applications

I will develop professional Django web apps and Python scripts

I will build a scraper or crawler to mine data from a website

I will web scrape and data mine anything with Python

I will web scrape and data mine into an Excel spreadsheet

- I will extract data from any website according to your requirements using Visual Basic.

- I will use web scraping and data mining techniques to get the required information from any website.

- I will write programs that automatically extract data from a website and save it to a Microsoft Excel spreadsheet, a PDF, TXT, or CSV file, or an Access, MySQL, Microsoft SQL Server, or Oracle database.

- Please message me before placing an order.
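The listing above mentions exporting scraped data to Excel and CSV. As a sketch in Python (rather than the Visual Basic the listing names), the standard `csv` module can turn scraped records into CSV text that Excel opens directly. The `records_to_csv` helper and the business records are hypothetical.

```python
import csv
import io


def records_to_csv(records, fieldnames):
    """Serialize scraped records (dicts) to CSV text with a header row."""
    buffer = io.StringIO()
    # extrasaction="ignore" drops any keys not listed in fieldnames.
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Made-up records standing in for scraped results.
scraped = [
    {"name": "Acme Plumbing", "phone": "555-0100", "city": "Springfield"},
    {"name": "Best Bakery, Inc.", "phone": "555-0199", "city": "Shelbyville"},
]

csv_text = records_to_csv(scraped, ["name", "phone", "city"])
print(csv_text)
```

Values containing commas, such as "Best Bakery, Inc.", are quoted automatically. To save the result, write `csv_text` to a file opened with `newline=""`, which stops Python from translating the `\r\n` row endings that the `csv` module emits.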

I will web scrape or mine data from a website

I will do web scraping professionally

If you want to mine any type of data, contact me now.

What I can do:

- Mine data from listings such as Yellow Pages, Yelp, and Craigslist

- Mine data from files (PDF, scanned documents, images)

- Organize your unstructured data

- Convert HTML pages into data

- Write a custom scraper

- Develop a Windows application for custom data mining

- and many more.
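Converting HTML pages into data often means turning an HTML table into records. A minimal sketch using Python's built-in `html.parser`, assuming a single well-formed table whose first row holds the column names and whose cells have explicit closing tags; `TableParser`, `table_to_records`, and the sample page are hypothetical.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect the text of each <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def table_to_records(html):
    """Use the first table row as keys and turn each later row into a dict."""
    parser = TableParser()
    parser.feed(html)
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


page = """
<table>
  <tr><th>Business</th><th>Phone</th></tr>
  <tr><td>Acme Plumbing</td><td>555-0100</td></tr>
  <tr><td><b>Best</b> Bakery</td><td>555-0199</td></tr>
</table>
"""

records = table_to_records(page)
print(records)
```

Text inside nested tags such as `<b>` is kept, but nested tables, omitted closing tags, and `colspan` are not handled, and a page with no table rows raises `ValueError` at the unpacking step.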

So what are you waiting for? Let's get started. Just send me the link, the required fields, a sample (optional), and instructions for finding the required data.

Note: Please don't place an order directly. Discuss it with me first to avoid any confusion.

I will web scrape or mine data from a webpage or website

I will mine data or web scrape from a website or marketplace

I will make your website capable of running a bitcoin miner

Please make sure that the site where you want this miner installed supports it. You will need the site's admin username and password.

I will web scrape and mine data from a webpage using Python and Scrapy