How to write a web crawler service

To write a web crawler service, keep three things in mind. First, you need a clear understanding of what a web crawler is and how it works. Second, you need a good grasp of the programming language you are writing the service in. Finally, you need a solid understanding of the website you are trying to crawl.

There is no single right way to write a web crawler service. The main steps, however, are understanding the needs of the customer, designing the architecture of the crawler, and implementing it.

Web crawlers are programs that browse the World Wide Web in a methodical, automated manner. The process begins with a list of URLs to visit, called the seed list. As the crawler visits these pages, it identifies the hyperlinks on each page and adds them to the list of URLs still to visit, called the crawl frontier. The crawler continues until it has visited every URL in the crawl frontier.

There are many different ways to write a web crawler, but all of them share some common features.

First, a web crawler must have a way to store the URLs it has already visited. This is necessary to avoid getting stuck in a loop, where the crawler keeps visiting the same URLs over and over again.

Second, a web crawler must have a way to determine which URLs to visit next. This can be done in a number of ways, but the most common is to use a priority queue. URLs are ordered in the queue by factors such as the number of times the URL has been visited, the number of links pointing to the URL, and the time since the URL was last visited.

Third, a web crawler must have a way to download the HTML content of the URLs it visits. This is typically done with the help of an HTTP library, such as the Apache HttpClient library.

Finally, a web crawler must have a way to parse the HTML content to extract the data it is looking for. This can be done with the help of a library such as Jsoup.
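The four features described above (a visited set, a prioritized frontier, a downloader, and a parser) can be sketched in a few dozen lines. The example below is only an illustration under some assumptions: it uses Python's standard library (`urllib` and `html.parser`) as stand-ins for the HTTP and HTML-parsing libraries mentioned above, it uses link depth as the queue priority purely for simplicity, and the names `LinkParser`, `extract_links`, `fetch`, `crawl`, and the `max_pages` limit are all hypothetical.

```python
import heapq
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag
from urllib.request import urlopen


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def extract_links(base_url, html):
    """Return absolute URLs (fragments removed) for the links in html."""
    parser = LinkParser()
    parser.feed(html)
    return [urldefrag(urljoin(base_url, href)).url for href in parser.links]


def fetch(url):
    """Download a page over HTTP and decode it as text."""
    with urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def crawl(seeds, fetch_page=fetch, max_pages=50):
    """Crawl outward from the seed list; return URLs in the order visited."""
    # Frontier entries are (depth, url); heapq pops the lowest depth first.
    # Depth is an assumed priority; a real service might rank URLs differently.
    frontier = [(0, url) for url in seeds]
    heapq.heapify(frontier)
    visited = set()  # URLs already handled, so loops between pages terminate
    order = []
    while frontier and len(order) < max_pages:
        depth, url = heapq.heappop(frontier)
        if url in visited:
            continue
        visited.add(url)
        try:
            html = fetch_page(url)
        except (OSError, ValueError):
            continue  # skip pages that cannot be downloaded
        order.append(url)
        for link in extract_links(url, html):
            if link.startswith(("http://", "https://")) and link not in visited:
                heapq.heappush(frontier, (depth + 1, link))
    return order
```

Calling `crawl(["https://example.com/"])` would start from that single seed and stop once the frontier is empty or `max_pages` pages have been fetched. Passing a different `fetch_page` function makes it possible to try the loop against canned HTML without touching the network.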

Top services about How to write a web crawler

I will scrape and pull data from any website

I will do data extraction, web scraping, and data mining

I will write Python bots and crawlers

I will write SEO optimized articles on any topic

I will do article writing and content rewriting with perfect SEO

I will write a web scraping script, spider, or crawler using Python

I will write a fast and efficient crawler and bot

I'll write a web crawler that will:

- Work Efficiently

- Scrape Fast

- Get To-the-Point Information

- Store the Scraped Information in a Database, CSV, Excel, or Any Other Format

What you can get scraped:

- Any Website

- Google Search Results (web & images)

- Any Other Search Engine

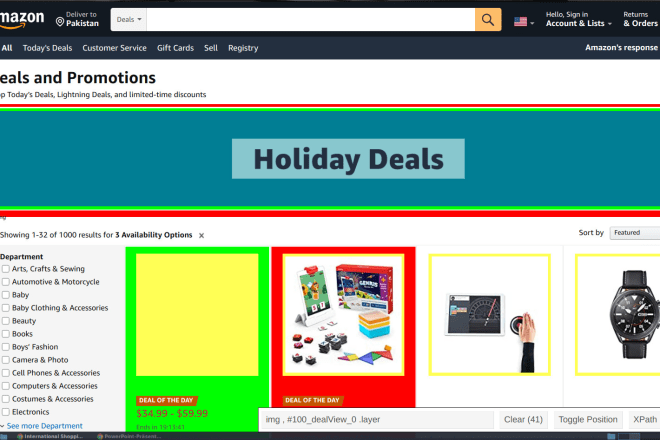

- Products from Amazon, AliExpress, etc.

- Any E-Commerce Website.

You can also contact me for bot-related tasks.

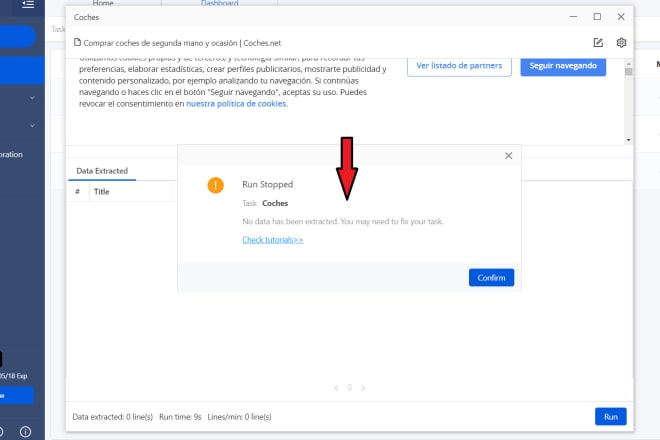

I will solve any Octoparse web scraping issues

I will write a web crawler bot

I will write scraper, crawler, grabber, and parser scripts

I will write scripts for web scraping, crawlers, data gathering, and bots

I will write a web crawler, scraper, and scraping script in Python

I will create a PHP-based crawler

A multi-cURL, multi-threaded PHP crawler for fetching information from external website(s) and keeping your local database updated at all times. The script can be run multiple times a day via cron, and the information can be downloaded in CSV/JSON format or stored directly in your MySQL database.

I will create a PHP web crawler, scraper, and parser script for you